POSITRON

Accelerating Intelligence

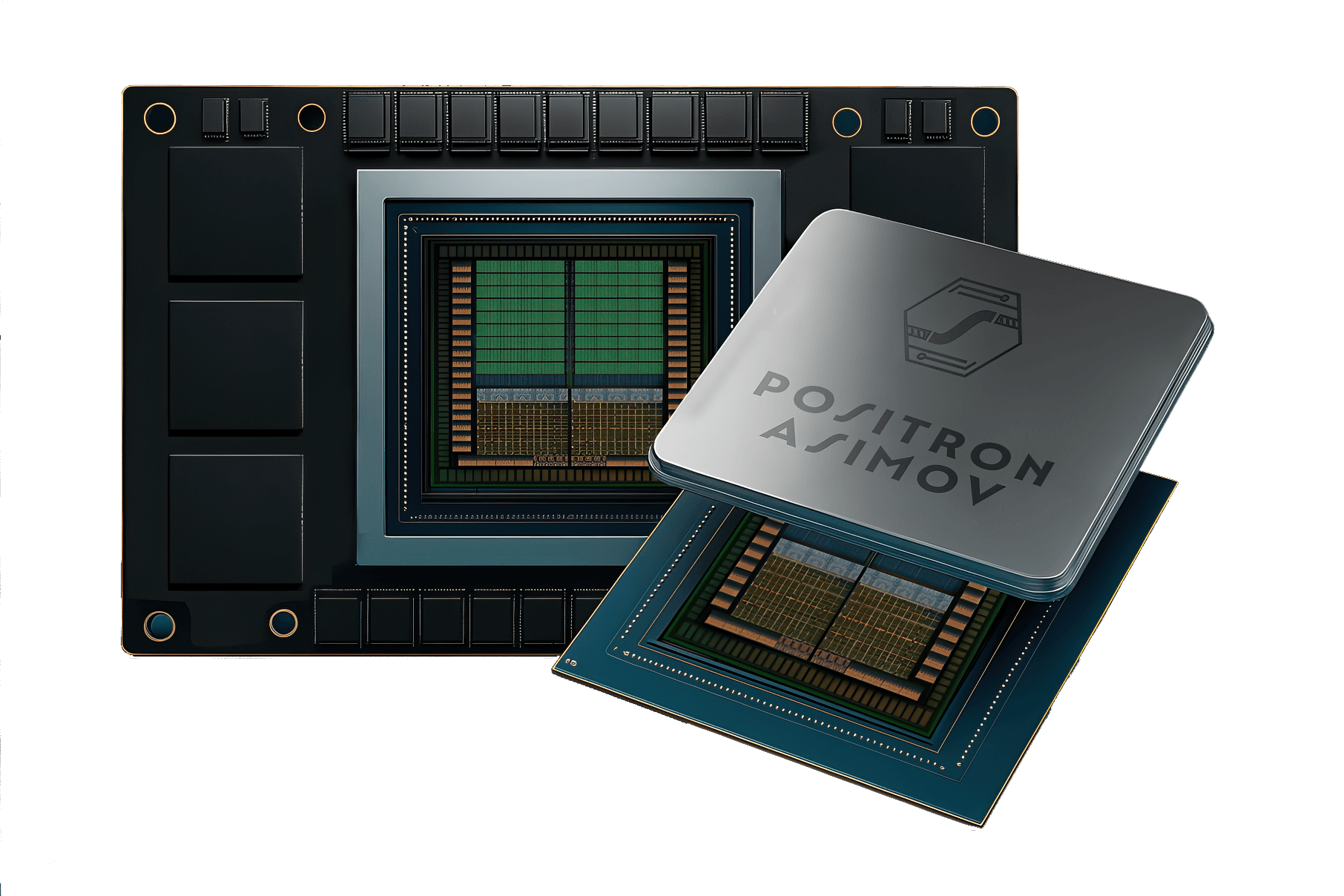

Purpose-built hardware for the age of generative AI

Delivering the highest performance, lowest power, and best TCO for Transformer model inference at any scale.

Our Products

Production-ready inference appliance supporting up to 500B Parameter Models

Superintelligence-in-a-Box with 8TB+ memory, powered by 4x Asimov chips

Purpose built AI Inference accelerator silicon with 2TB+ memory per chip

The Positronic Brain

Our worldview on AI infrastructure and the future we're building

Every Transformer Runs on Positron

Supports all Transformer models

seamlessly with zero time and zero effort

Positron maps any trained HuggingFace Transformers Library model directly onto hardware for maximum performance and ease of use

.pt

.safetensors

Develop or procure a model using the HuggingFace Transformers Library

Drag & Drop to Upload

or

Upload or link trained model file (.pt or .safetensors) to Positron Model Manager

from openai import OpenAI

client = OpenAI(uri="api.positron.ai")

client.chat.completions

.create(

model="my_model"

)Update client applications to use Positron's OpenAI API-compliant endpoint