POSITRON

Accelerating Intelligence with Hardware for Transformer Model Inference

Purpose-Built Generative AI Systems

Positron delivers the highest performance, lowest power, and best total cost of ownership solution for Transformer Model Inference.

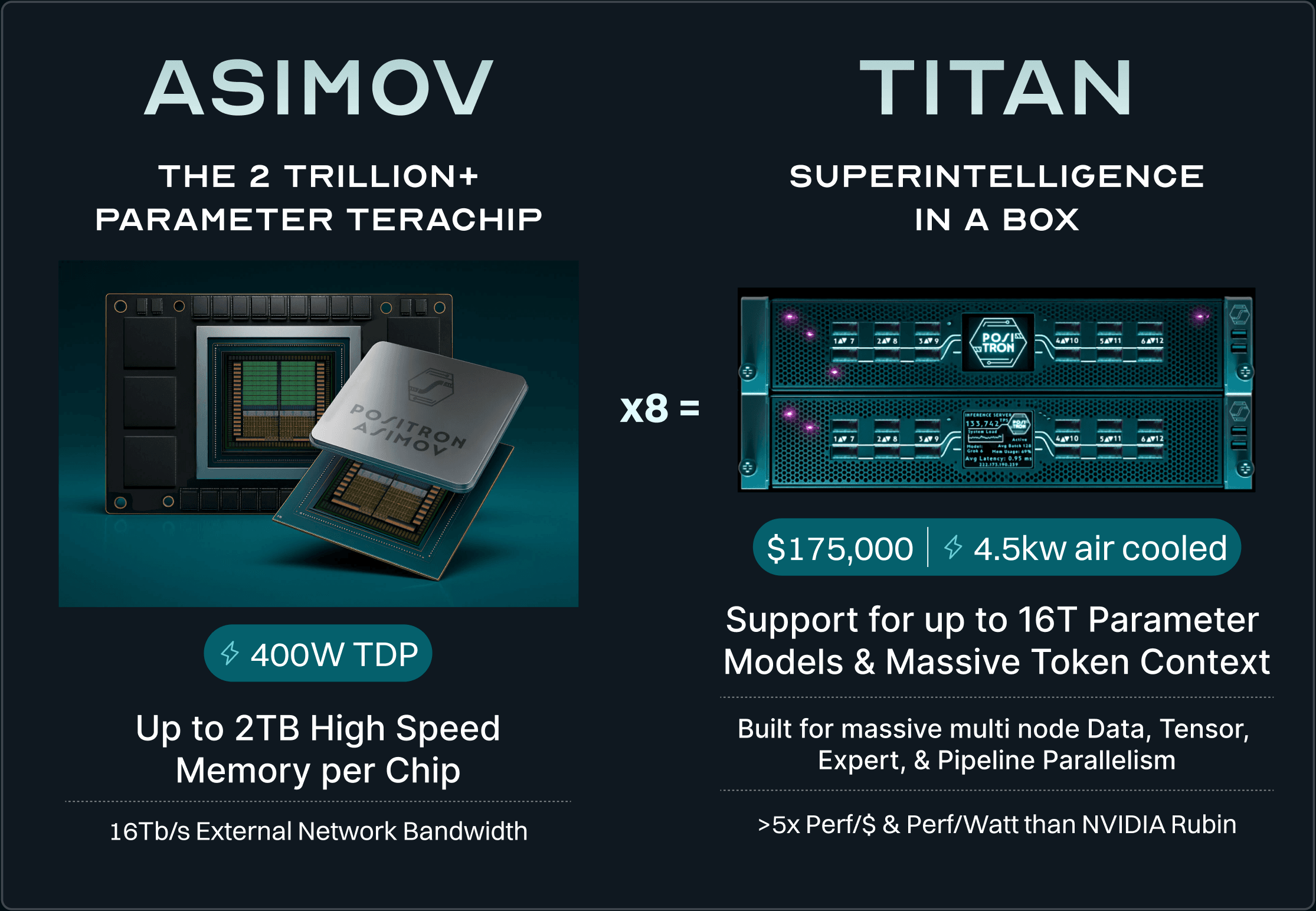

2025

2026

Head to Head Systems Comparison

(Llama 3.1 8B with BF16 compute, no speculation or paged attention)

Positron delivers leading Performance per Dollar and Performance per Watt compared to NVIDIA

01

NVIDIA DGX H200

System Power ⚡ 5900W

182.00

Tokens/sec/User

Perf/Dollar: 1.00x

Perf/Watt: 1.00x

02

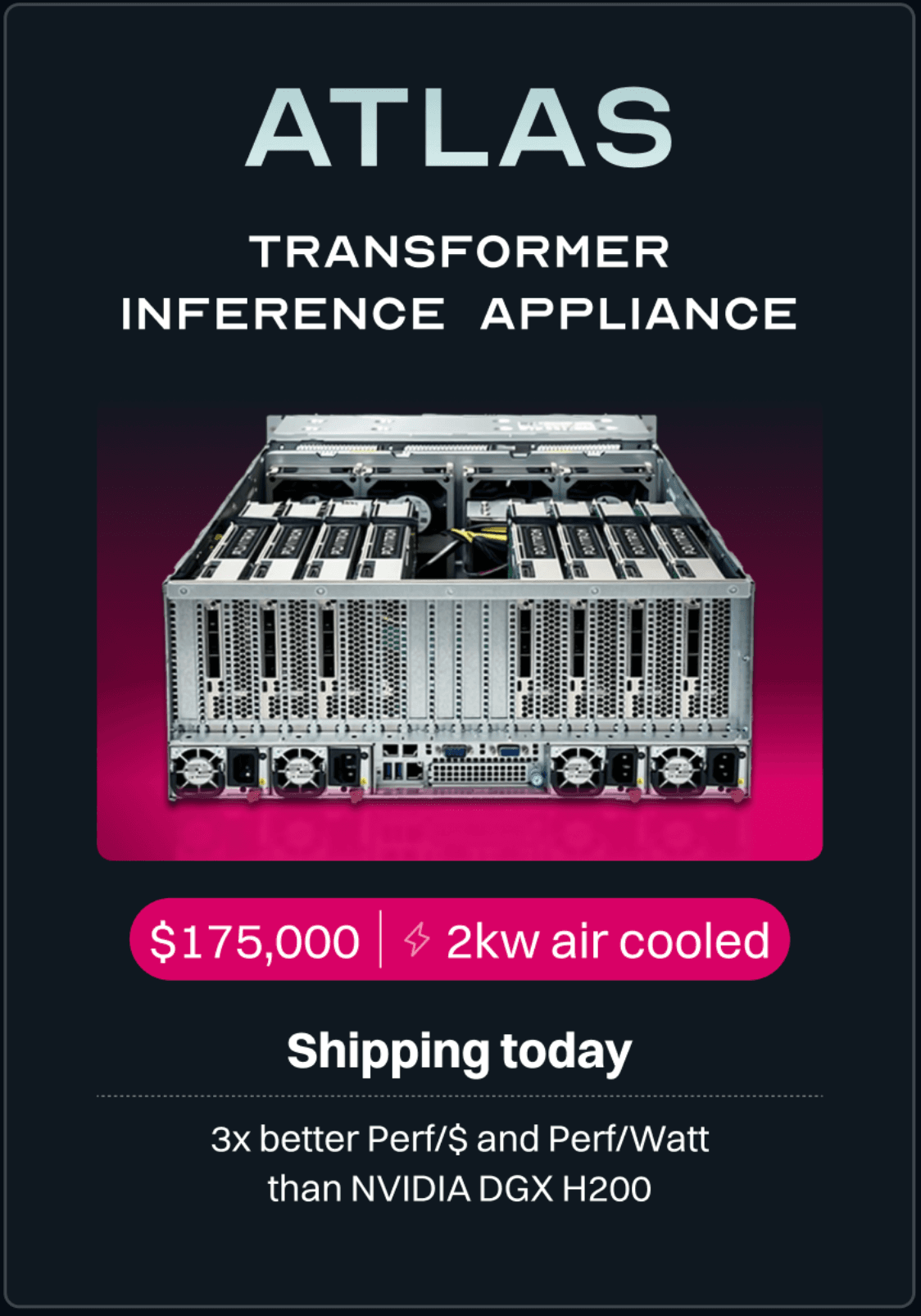

Positron Atlas

System Power ⚡ 2000W

280.00

Tokens/sec/User

Perf/Dollar: 3.08x

Perf/Watt: 4.54x
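The Perf/Watt figure can be reproduced from the numbers on this slide. The sketch below assumes Perf/Watt means Tokens/sec/User divided by System Power, normalized to the NVIDIA DGX H200 baseline. The class and function names are illustrative, not part of any Positron tooling.

```python
from dataclasses import dataclass


@dataclass
class SystemResult:
    name: str
    tokens_per_sec_per_user: float
    system_power_watts: float

    @property
    def perf_per_watt(self) -> float:
        # Assumed definition: per-user throughput divided by system power
        return self.tokens_per_sec_per_user / self.system_power_watts


def relative_perf_per_watt(system: SystemResult, baseline: SystemResult) -> float:
    """Perf/Watt of `system` expressed as a multiple of `baseline`."""
    return system.perf_per_watt / baseline.perf_per_watt


# Figures taken from the comparison above
dgx = SystemResult("NVIDIA DGX H200", 182.0, 5900.0)
atlas = SystemResult("Positron Atlas", 280.0, 2000.0)

ratio = relative_perf_per_watt(atlas, dgx)
print(f"{atlas.name}: {ratio:.2f}x Perf/Watt vs {dgx.name}")  # 4.54x
```

Perf/Dollar is not recomputed here because the slide does not list system prices.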

Every Transformer Runs on Positron

Supports all Transformer models seamlessly with zero time and zero effort

Positron maps any trained HuggingFace Transformers Library model directly onto hardware for maximum performance and ease of use

Step 1

Develop or procure a model (.pt or .safetensors) using the HuggingFace Transformers Library

Step 2

Upload (drag and drop) or link a trained model file (.pt or .safetensors) to Positron Model Manager
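Before uploading, it can help to check a model file locally. The sketch below is an optional pre-upload check and not part of Positron Model Manager. It confirms the file has one of the two extensions named in Step 2 and, for `.safetensors` files, lists the tensor names from the file header. It relies on the published safetensors layout: an 8-byte little-endian header length followed by a JSON header. The function names are hypothetical.

```python
import json
import struct
from pathlib import Path

SUPPORTED_SUFFIXES = {".pt", ".safetensors"}  # formats named in Step 2


def read_safetensors_header(path):
    """Return the JSON header of a .safetensors file as a dict."""
    with open(path, "rb") as f:
        # First 8 bytes: unsigned little-endian length of the JSON header
        (header_len,) = struct.unpack("<Q", f.read(8))
        return json.loads(f.read(header_len))


def summarize_model_file(path):
    """Check the extension and report file size and, if available, tensor names."""
    path = Path(path)
    suffix = path.suffix.lower()
    if suffix not in SUPPORTED_SUFFIXES:
        raise ValueError(f"unsupported model file type: {suffix!r}")
    summary = {"name": path.name, "bytes": path.stat().st_size}
    if suffix == ".safetensors":
        header = read_safetensors_header(path)
        # "__metadata__" is an optional free-form entry, not a tensor
        summary["tensors"] = sorted(k for k in header if k != "__metadata__")
    return summary
```

Calling `summarize_model_file("my_model.safetensors")` returns a small dictionary that can be compared against the expected model architecture before the upload.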

Step 3

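    from openai import OpenAI

    # Point the client at Positron's endpoint; the API key is read from OPENAI_API_KEY
    client = OpenAI(base_url="https://api.positron.ai")

    response = client.chat.completions.create(
        model="my_model",
        messages=[{"role": "user", "content": "Hello"}],
    )

Update client applications to use Positron's OpenAI API-compliant endpoint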
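For clients that do not use the OpenAI SDK, the same call can be made as a plain HTTP request. The sketch below builds an OpenAI-style chat completion request with the standard library only, and does not send it. It assumes the endpoint accepts the usual `/chat/completions` route and bearer-token authentication. The exact path prefix, the `build_chat_request` helper, and the placeholder key are assumptions, not documented Positron details.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumed route: the OpenAI SDK appends this path to its base URL
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("https://api.positron.ai", "my_model", "Hello", "YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it. Building the request separately makes it easy to inspect the payload first.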